Wald and Gamma Regression (Australian insurance claims 2004-2005)¶

[1]:

import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

[2]:

az.style.use("arviz-darkgrid")

np.random.seed(1234)

Load and examine Vehicle insurance data¶

In this notebook we use a data set consisting of 67856 insurance policies and 4624 (6.8%) claims in Australia between 2004 and 2005. The original source of this dataset is the book Generalized Linear Models for Insurance Data by Piet de Jong and Gillian Z. Heller.

[3]:

data = bmb.load_data("carclaims")

data.head()

[3]:

| veh_value | exposure | clm | numclaims | claimcst0 | veh_body | veh_age | gender | area | agecat | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.06 | 0.303901 | 0 | 0 | 0.0 | HBACK | 3 | F | C | 2 |

| 1 | 1.03 | 0.648871 | 0 | 0 | 0.0 | HBACK | 2 | F | A | 4 |

| 2 | 3.26 | 0.569473 | 0 | 0 | 0.0 | UTE | 2 | F | E | 2 |

| 3 | 4.14 | 0.317591 | 0 | 0 | 0.0 | STNWG | 2 | F | D | 2 |

| 4 | 0.72 | 0.648871 | 0 | 0 | 0.0 | HBACK | 4 | F | C | 2 |

Let’s see the meaning of the variables before creating any plot or fitting any model.

veh_value: Vehicle value, ranges from \$0 to \$350,000.

exposure: Proportion of the year where the policy was exposed. In practice each policy is not exposed for the full year. Some policies come into force partly into the year while others are canceled before the year’s end.

clm: Claim occurrence. 0 (no), 1 (yes).

numclaims: Number of claims.

claimcst0: Claim amount. 0 if no claim. Ranges from \$200 to \$55922.

veh_body: Vehicle body type. Can be one of bus, convertible, coupe, hatchback, hardtop, motorized caravan/combi, minibus, panel van, roadster, sedan, station wagon, truck, and utility.

veh_age: Vehicle age. 1 (new), 2, 3, and 4.

gender: Gender of the driver. M (Male) and F (Female).

area: Driver’s area of residence. Can be one of A, B, C, D, E, and F.

agecat: Driver’s age category. 1 (youngest), 2, 3, 4, 5, and 6.

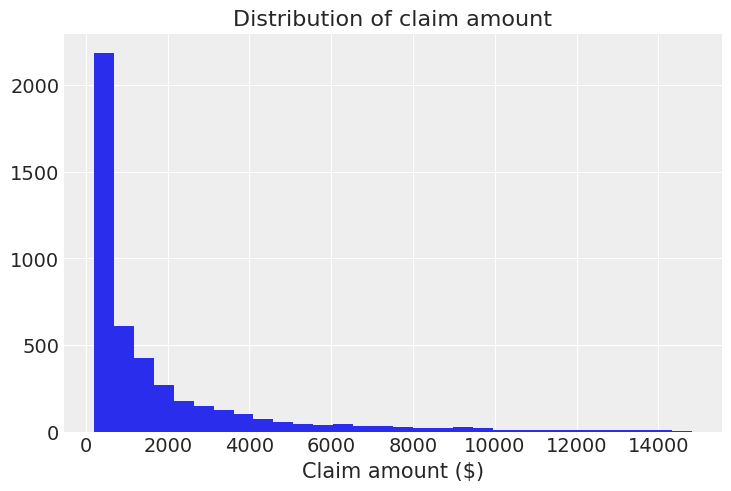

The variable of interest is the claim amount, given by "claimcst0". We keep the records where there is a claim, so claim amount is greater than 0.

[4]:

data = data[data["claimcst0"] > 0]

For clarity, we only show those claims amounts below \$15,000, since there are only 65 records above that threshold.

[5]:

data[data["claimcst0"] > 15000].shape[0]

[5]:

65

[6]:

plt.hist(data[data["claimcst0"] <= 15000]["claimcst0"], bins=30)

plt.title("Distribution of claim amount")

plt.xlabel("Claim amount ($)");

And this is when you say: “Oh, there really are ugly right-skewed distributions out there!”. Well, yes, we’ve all been there :)

In this case we are going to fit GLMs with a right-skewed distribution for the random component. This time we will be using Wald and Gamma distributions. One of their differences is that the variance is proportional to the cubic mean in the case of the Wald distribution, and proportional to the squared mean in the case of the Gamma distribution.

Wald family¶

The Wald family (a.k.a inverse Gaussian model) states that

where the pdf of a Wald distribution is given by

for \(x > 0\), mean \(\mu > 0\) and \(\lambda > 0\) is the shape parameter. The variance is given by \(\sigma^2 = \mu^3/\lambda\). The canonical link is \(g(\mu_i) = \mu_i^{-2}\), but \(g(\mu_i) = \log(\mu_i)\) is usually preferred, and it is what we use here.

Gamma family¶

The default parametrization of the Gamma density function is

where \(x > 0\), and \(\alpha > 0\) and \(\beta > 0\) are the shape and rate parameters, respectively.

But GLMs model the mean of the function, so we need to use an alternative parametrization where

and thus we have

where \(\alpha\) is the shape parameter in the original parametrization of the gamma pdf. The canonical link is \(g(\mu_i) = \mu_i^{-1}\), but here we use \(g(\mu_i) = \log(\mu_i)\) again.

Model fit¶

In this example we are going to use the binned age, the gender, and the area of residence to predict the amount of the claim, conditional on the existence of the claim because we are only working with observations where there is a claim.

"agecat" is interpreted as a numeric variable in our data frame, but we know it is categorical, and we wouldn’t be happy if our model takes it as if it was numeric, would we?

We have two alternatives to tell Bambi that this numeric variable must be treated as categorical. The first one is to wrap the name of the variable with C(), and the other is to pass the same name to the categorical argument when we create the model. We are going to use the first approach with the Wald family and the second with the Gamma.

The C() notation is taken from Patsy and is encouraged when you want to explicitly pass the order of the levels of the variables. If you are happy with the default order, better pass the name to categorical so tables and plots have prettier labels :)

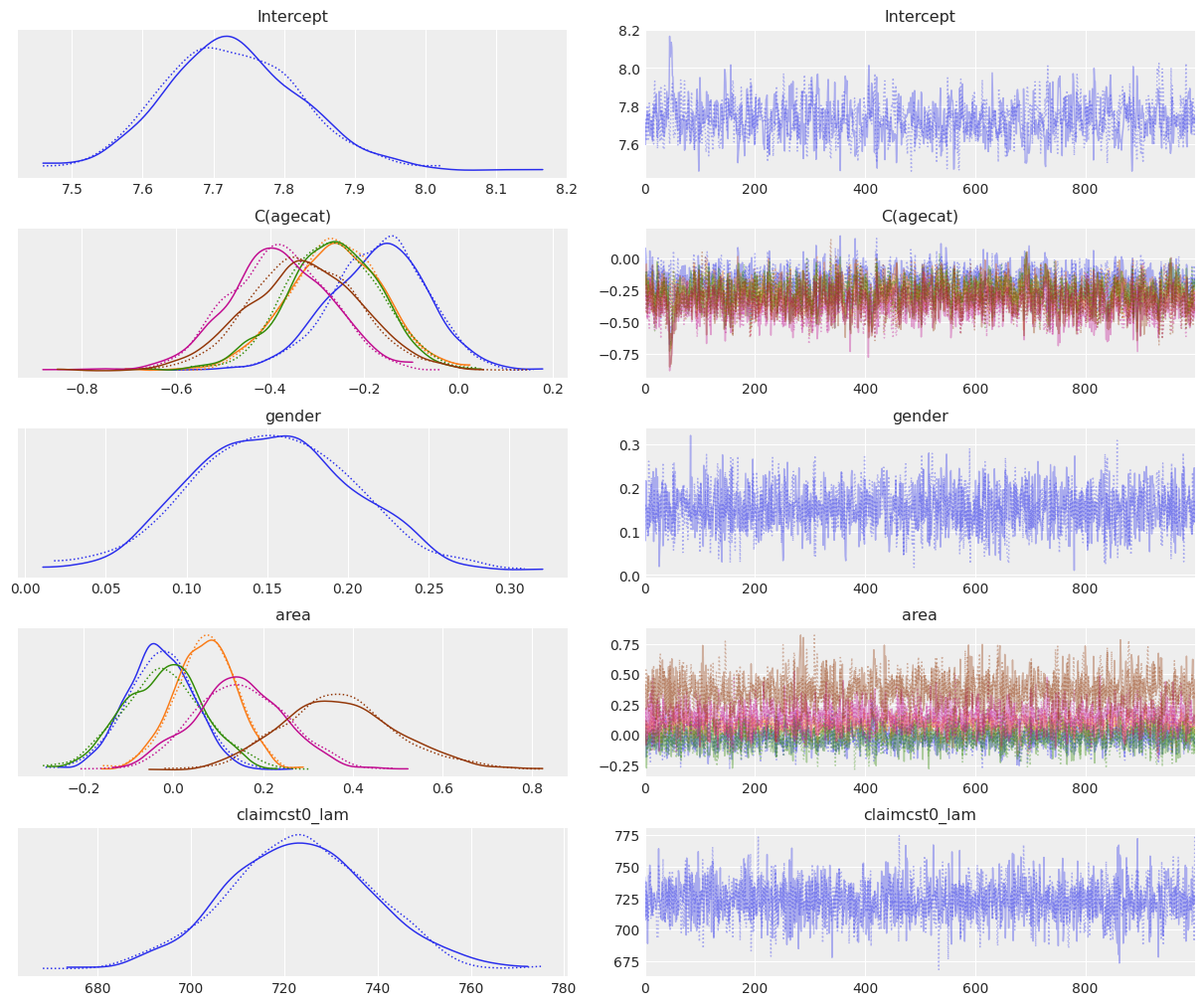

Wald¶

[7]:

model_wald = bmb.Model("claimcst0 ~ C(agecat) + gender + area", data, family = "wald", link = "log")

fitted_wald = model_wald.fit(tune=2000, target_accept=0.9)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [claimcst0_lam, Intercept, area, gender, C(agecat)]

Sampling 2 chains for 2_000 tune and 1_000 draw iterations (4_000 + 2_000 draws total) took 19 seconds.

[8]:

az.plot_trace(fitted_wald);

[9]:

az.summary(fitted_wald)

[9]:

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 7.728 | 0.098 | 7.547 | 7.914 | 0.004 | 0.003 | 706.0 | 1022.0 | 1.0 |

| C(agecat)[2] | -0.171 | 0.103 | -0.362 | 0.017 | 0.004 | 0.003 | 688.0 | 958.0 | 1.0 |

| C(agecat)[3] | -0.263 | 0.101 | -0.452 | -0.078 | 0.004 | 0.003 | 679.0 | 1024.0 | 1.0 |

| C(agecat)[4] | -0.268 | 0.100 | -0.448 | -0.076 | 0.004 | 0.003 | 710.0 | 813.0 | 1.0 |

| C(agecat)[5] | -0.382 | 0.107 | -0.580 | -0.189 | 0.004 | 0.003 | 896.0 | 912.0 | 1.0 |

| C(agecat)[6] | -0.326 | 0.118 | -0.541 | -0.104 | 0.004 | 0.003 | 855.0 | 743.0 | 1.0 |

| gender[M] | 0.153 | 0.049 | 0.066 | 0.243 | 0.001 | 0.001 | 2491.0 | 1457.0 | 1.0 |

| area[B] | -0.029 | 0.075 | -0.180 | 0.101 | 0.002 | 0.001 | 1704.0 | 1693.0 | 1.0 |

| area[C] | 0.072 | 0.068 | -0.061 | 0.197 | 0.002 | 0.001 | 1310.0 | 1419.0 | 1.0 |

| area[D] | -0.023 | 0.091 | -0.183 | 0.155 | 0.002 | 0.002 | 1384.0 | 1617.0 | 1.0 |

| area[E] | 0.148 | 0.103 | -0.033 | 0.351 | 0.002 | 0.002 | 1818.0 | 1570.0 | 1.0 |

| area[F] | 0.372 | 0.130 | 0.140 | 0.628 | 0.003 | 0.002 | 1756.0 | 1364.0 | 1.0 |

| claimcst0_lam | 723.235 | 15.547 | 692.984 | 751.526 | 0.312 | 0.221 | 2494.0 | 1460.0 | 1.0 |

If we look at the agecat variable, we can see the log mean of the claim amount tends to decrease when the age of the person increases, with the exception of the last category where we can see a slight increase in the mean of the coefficient (-0.307 vs -0.365 of the previous category). However, these differences only represent a slight tendency because of the large overlap between the marginal posteriors for these coefficients (see overlayed density plots for C(agecat).

The posterior for gender tells us that the claim amount tends to be larger for males than for females, with the mean being 0.153 and the credible interval ranging from 0.054 to 0.246.

Finally, from the marginal posteriors for the areas, we can see that F is the only area that clearly stands out, with a higher mean claim amount than in the rest. Area E may also have a higher claim amount, but this difference with the other areas is not as evident as it happens with F.

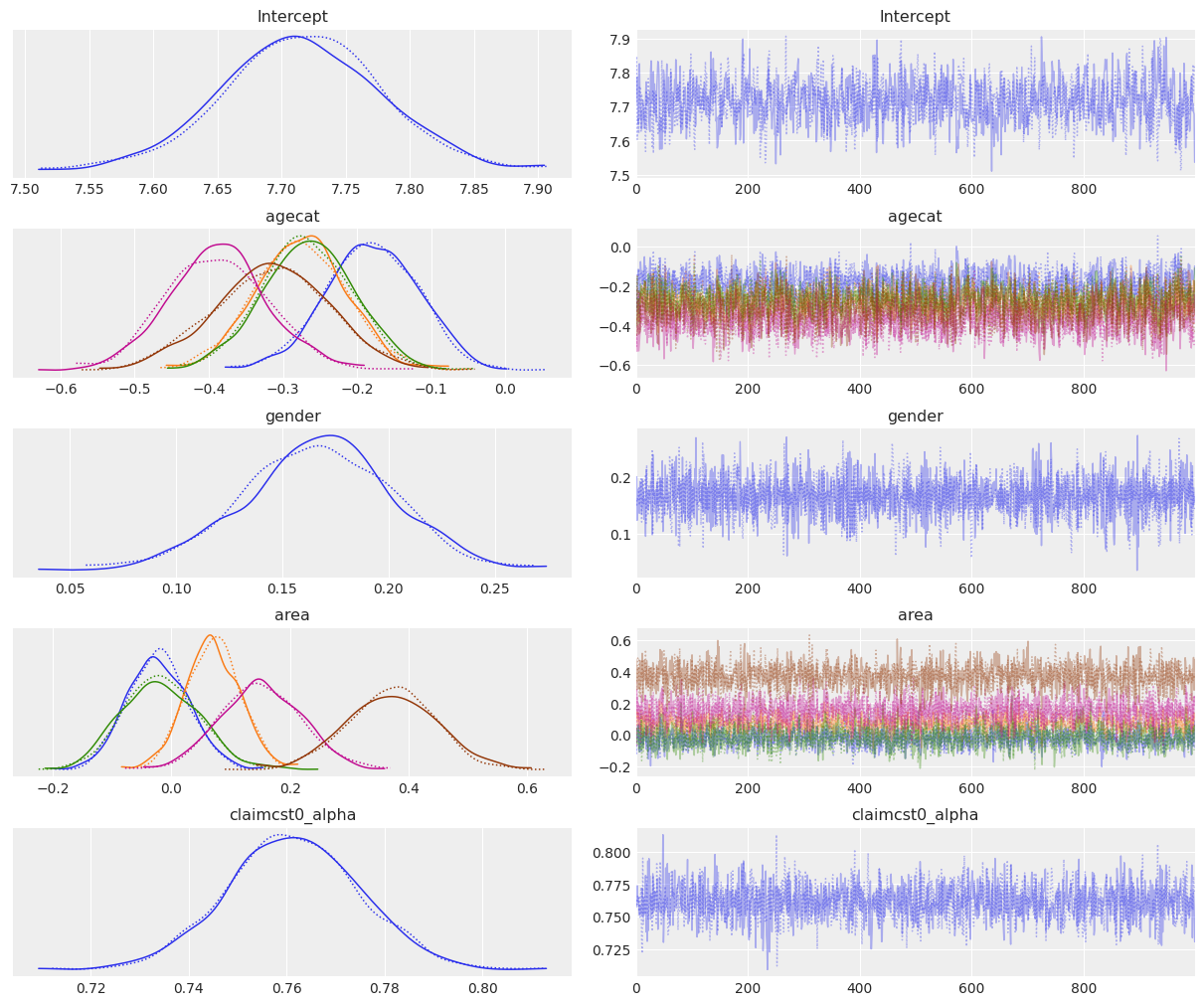

Gamma¶

[10]:

model_gamma = bmb.Model(

"claimcst0 ~ agecat + gender + area",

data,

family="gamma",

link="log",

categorical="agecat",

)

fitted_gamma = model_gamma.fit(tune=2000, target_accept=0.9)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [claimcst0_alpha, Intercept, area, gender, agecat]

Sampling 2 chains for 2_000 tune and 1_000 draw iterations (4_000 + 2_000 draws total) took 88 seconds.

[11]:

az.plot_trace(fitted_gamma);

[12]:

az.summary(fitted_gamma)

[12]:

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 7.716 | 0.062 | 7.609 | 7.846 | 0.002 | 0.001 | 1028.0 | 1292.0 | 1.0 |

| agecat[2] | -0.180 | 0.062 | -0.295 | -0.064 | 0.002 | 0.001 | 1080.0 | 1486.0 | 1.0 |

| agecat[3] | -0.276 | 0.062 | -0.398 | -0.165 | 0.002 | 0.001 | 1034.0 | 1481.0 | 1.0 |

| agecat[4] | -0.267 | 0.061 | -0.382 | -0.152 | 0.002 | 0.002 | 803.0 | 1458.0 | 1.0 |

| agecat[5] | -0.388 | 0.068 | -0.512 | -0.259 | 0.002 | 0.002 | 1035.0 | 1204.0 | 1.0 |

| agecat[6] | -0.315 | 0.077 | -0.453 | -0.161 | 0.002 | 0.002 | 1197.0 | 1439.0 | 1.0 |

| gender[M] | 0.167 | 0.035 | 0.099 | 0.230 | 0.001 | 0.000 | 3566.0 | 1355.0 | 1.0 |

| area[B] | -0.023 | 0.052 | -0.122 | 0.070 | 0.001 | 0.001 | 1901.0 | 1534.0 | 1.0 |

| area[C] | 0.071 | 0.046 | -0.012 | 0.160 | 0.001 | 0.001 | 1556.0 | 1527.0 | 1.0 |

| area[D] | -0.017 | 0.065 | -0.136 | 0.099 | 0.002 | 0.001 | 1833.0 | 1270.0 | 1.0 |

| area[E] | 0.151 | 0.068 | 0.026 | 0.280 | 0.001 | 0.001 | 2216.0 | 1608.0 | 1.0 |

| area[F] | 0.371 | 0.076 | 0.215 | 0.499 | 0.002 | 0.001 | 2271.0 | 1638.0 | 1.0 |

| claimcst0_alpha | 0.761 | 0.013 | 0.738 | 0.787 | 0.000 | 0.000 | 2514.0 | 1539.0 | 1.0 |

The interpretation of the parameter posteriors is very similar to what we’ve done for the Wald family. The only difference is that some differences, such as the ones for the area posteriors, are a little more exacerbated here.

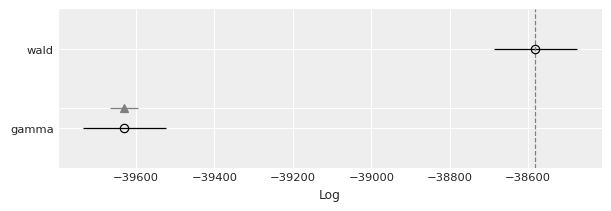

Model comparison¶

We can perform a Bayesian model comparison very easily with az.compare(). Here we pass a dictionary with the InferenceData objects that Model.fit() returned and az.compare() returns a data frame that is ordered from best to worst according to the criteria used.

[13]:

models = {"wald": fitted_wald, "gamma": fitted_gamma}

df_compare = az.compare(models)

df_compare

/home/tomas/anaconda3/envs/bmb/lib/python3.8/site-packages/arviz/stats/stats.py:146: UserWarning: The default method used to estimate the weights for each model,has changed from BB-pseudo-BMA to stacking

warnings.warn(

[13]:

| rank | loo | p_loo | d_loo | weight | se | dse | warning | loo_scale | |

|---|---|---|---|---|---|---|---|---|---|

| wald | 0 | -38581.572882 | 13.038927 | 0.000000 | 1.000000e+00 | 106.058332 | 0.000000 | False | log |

| gamma | 1 | -39629.625926 | 27.483848 | 1048.053044 | 7.438494e-14 | 105.001367 | 35.730604 | False | log |

[14]:

az.plot_compare(df_compare, insample_dev=False);

By default, ArviZ uses loo, which is an estimation of leave one out cross-validation. Another option is the widely applicable information criterion (WAIC). Since the results are in the log scale, the better out-of-sample predictive fit is given by the model with the highest value, which is the Wald model.

[15]:

%load_ext watermark

%watermark -n -u -v -iv -w

Last updated: Tue Jul 27 2021

Python implementation: CPython

Python version : 3.8.5

IPython version : 7.18.1

matplotlib: 3.3.3

arviz : 0.11.2

json : 2.0.9

bambi : 0.5.0

numpy : 1.20.1

Watermark: 2.1.0