T-test

[1]:

import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

[2]:

az.style.use("arviz-darkgrid")

np.random.seed(1234)

In this notebook we demo two equivalent ways of performing a two-sample Bayesian t-test to compare the mean value of two Gaussian populations using Bambi.

Generate data

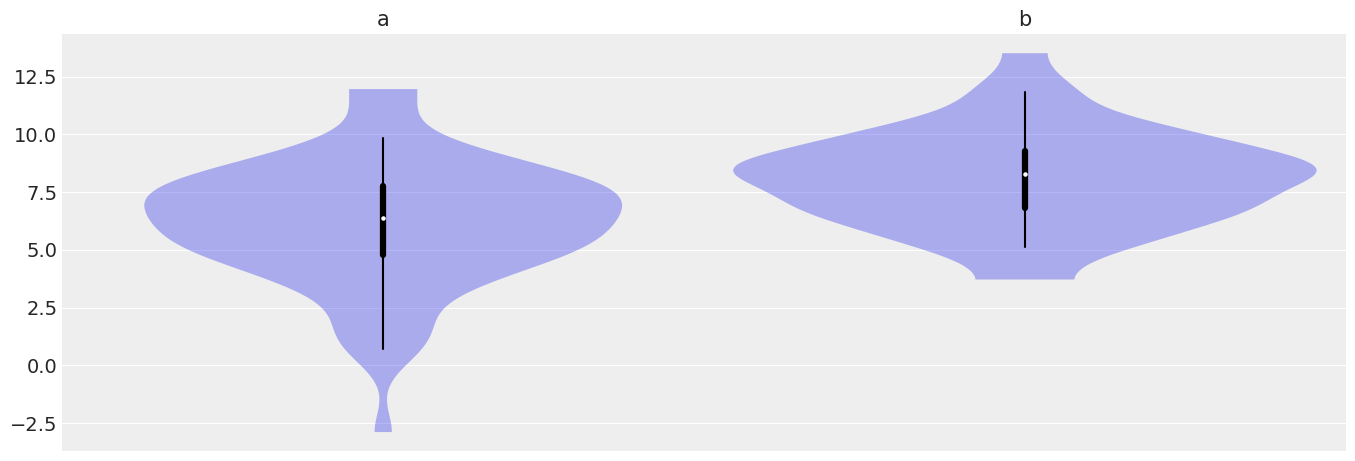

We generate 160 values from a Gaussian with \(\mu=6\) and \(\sigma=2.5\) and another 120 values from a Gaussian with \(\mu=8\) and \(\sigma=2\).

[3]:

a = np.random.normal(6, 2.5, 160)

b = np.random.normal(8, 2, 120)

df = pd.DataFrame({"Group": ["a"] * 160 + ["b"] * 120, "Val": np.hstack([a, b])})

[4]:

df.head()

[4]:

| | Group | Val |

|---|---|---|

| 0 | a | 7.178588 |

| 1 | a | 3.022561 |

| 2 | a | 9.581767 |

| 3 | a | 5.218370 |

| 4 | a | 4.198528 |

[5]:

az.plot_violin({"a": a, "b": b});


When we carry out a two sample t-test we are implicitly using a linear model that can be specified in different ways. One of these approaches is the following:

Model 1

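\[\mu_i = \beta_0 + \beta_1 (i) + \epsilon_i\]

where \(i = 0\) represents population 1, \(i = 1\) represents population 2, and \(\epsilon_i\) is a random error with mean 0. If we substitute the indicator values for the two groups we have

\[\mu_0 = \beta_0 + \epsilon_i\]

and

\[\mu_1 = \beta_0 + \beta_1 + \epsilon_i\]

If \(\mu_0 = \mu_1\) then

\[\beta_0 + \epsilon_i = \beta_0 + \beta_1 + \epsilon_i\]

\[0 = \beta_1\]

Thus, testing whether the means of the two populations are equal is equivalent to testing whether \(\beta_1\) is 0.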
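As a quick sanity check of this parameterization, the following is a minimal sketch (not part of the Bambi workflow) that fits the same linear model by ordinary least squares with NumPy. It assumes freshly simulated data from `np.random.default_rng`, so the numbers will differ from the ones in this notebook. The least-squares intercept equals the sample mean of group `a`, and the coefficient on the indicator equals the difference between the two sample means.

```python
import numpy as np

# Simulated data with the same design as the notebook (values will differ,
# because a different random generator is used here)
rng = np.random.default_rng(1234)
a = rng.normal(6, 2.5, 160)  # population 1
b = rng.normal(8, 2, 120)    # population 2

y = np.concatenate([a, b])
# Indicator variable: 0 for group a, 1 for group b
indicator = np.concatenate([np.zeros(a.size), np.ones(b.size)])
# Design matrix with an intercept column and the indicator column
X = np.column_stack([np.ones(y.size), indicator])

beta, *_ = np.linalg.lstsq(X, y, rcond=None)
beta_0, beta_1 = beta

print("beta_0:", beta_0, "mean of a:", a.mean())
print("beta_1:", beta_1, "mean of b - mean of a:", b.mean() - a.mean())
```

The Bayesian model fitted below uses the same structure, but places priors on the coefficients and returns a full posterior distribution instead of point estimates.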

Analysis

We start by instantiating our model and specifying the model previously described.

[6]:

model_1 = bmb.Model("Val ~ Group", df)

results_1 = model_1.fit()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [Val_sigma, Intercept, Group]

Sampling 2 chains for 1_000 tune and 1_000 draw iterations (2_000 + 2_000 draws total) took 2 seconds.

We’ve only specified the formula for the model, and Bambi automatically selected the prior distributions and the values of their parameters. We can inspect both the setup and the priors as follows:

[7]:

model_1

[7]:

Formula: Val ~ Group

Family name: Gaussian

Link: identity

Observations: 280

Priors:

Common-level effects

Intercept ~ Normal(mu: 6.9762, sigma: 8.1247)

Group ~ Normal(mu: 0.0, sigma: 12.4107)

Auxiliary parameters

sigma ~ HalfStudentT(nu: 4, sigma: 2.4567)

------

* To see a plot of the priors call the .plot_priors() method.

* To see a summary or plot of the posterior pass the object returned by .fit() to az.summary() or az.plot_trace()

[8]:

model_1.plot_priors();

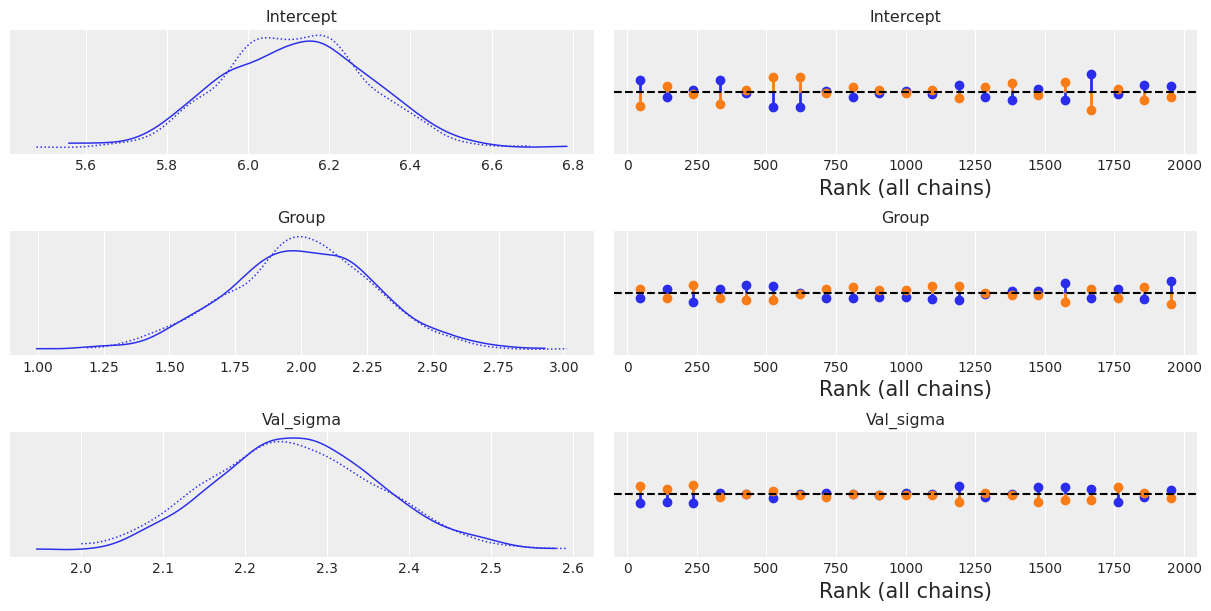

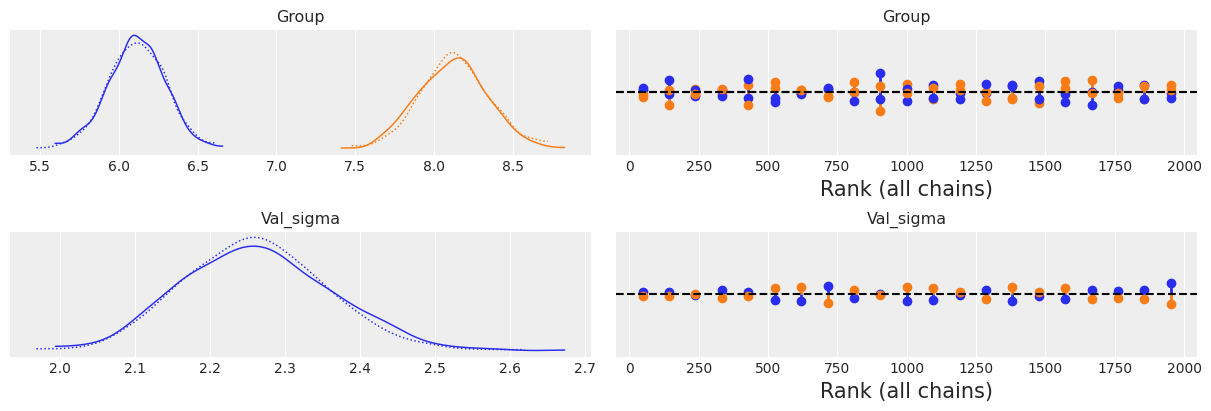

To inspect our posterior and the sampling process we can call az.plot_trace(). The option kind='rank_vlines' gives us a variant of the rank plot that uses lines and dots and helps us to inspect the stationarity of the chains. Since there is no clear pattern or serious deviations from the horizontal lines, we can conclude the chains are stationary.

[9]:

az.plot_trace(results_1, kind="rank_vlines");
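To make the idea behind a rank plot concrete, here is a minimal NumPy sketch. It is an illustration of the general technique, not ArviZ's implementation, and it uses simulated draws as a stand-in for real posterior samples. All draws are ranked within the pooled sample, and the ranks belonging to each chain are then binned. If the chains explore the same distribution, each chain's bin counts stay close to the uniform expectation.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for posterior draws with shape (chains, draws); not real Bambi output
draws = rng.normal(2.0, 0.27, size=(2, 1000))

# Rank every draw within the pooled sample (0 = smallest draw)
pooled_ranks = draws.ravel().argsort().argsort().reshape(draws.shape)

# Histogram each chain's ranks over a common set of bins
n_bins = 20
bin_edges = np.linspace(0, draws.size, n_bins + 1)
counts = np.stack([np.histogram(r, bins=bin_edges)[0] for r in pooled_ranks])

# Under good mixing every bin of every chain holds about this many draws
expected = draws.shape[1] / n_bins
deviation = counts - expected
print(deviation)
```

Large, systematic deviations in one chain (for example, many more low ranks than expected) would suggest that the chain is sampling a different region than the others.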

[10]:

az.summary(results_1)

[10]:

| | mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 6.117 | 0.182 | 5.797 | 6.447 | 0.003 | 0.002 | 2873.0 | 1528.0 | 1.0 |

| Group[b] | 2.003 | 0.275 | 1.459 | 2.489 | 0.005 | 0.004 | 2832.0 | 1519.0 | 1.0 |

| Val_sigma | 2.263 | 0.101 | 2.079 | 2.453 | 0.002 | 0.001 | 3176.0 | 1686.0 | 1.0 |

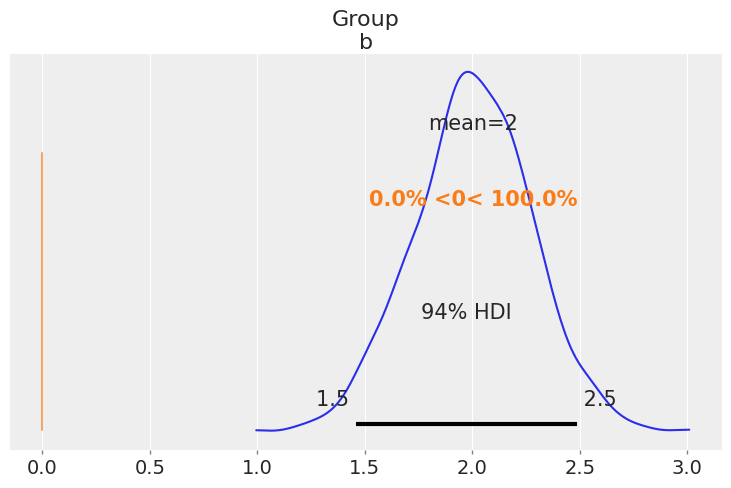

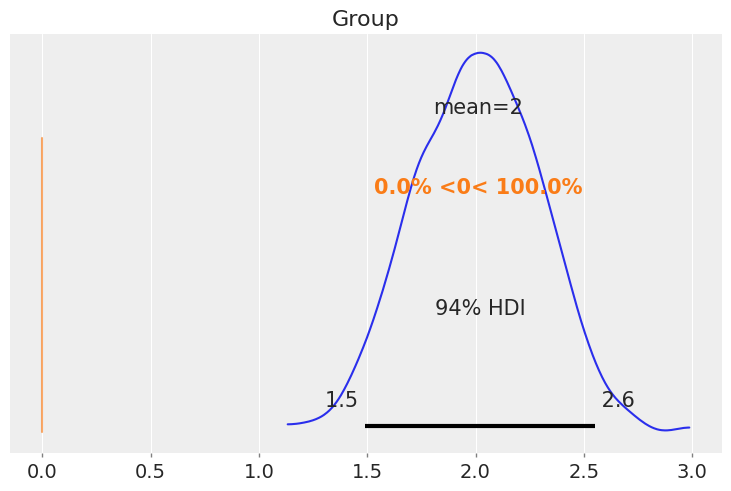

In the summary table we can see that the 94% highest density interval for \(\beta_1\) ranges from 1.459 to 2.489. Thus, according to the data and the model used, we conclude that the difference between the two population means is somewhere between roughly 1.5 and 2.5, and hence we support the hypothesis that \(\beta_1 \ne 0\).

Similar conclusions can be drawn from the density estimate of the posterior distribution of \(\beta_1\). As in the table, most of the probability for the difference in means lies roughly between 1.5 and 2.5.

[11]:

# Grab just the posterior of the term of interest (group)

group_posterior = results_1.posterior['Group']

az.plot_posterior(group_posterior, ref_val=0);
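The highest density interval shown in the summary table and in the posterior plot can be approximated directly from the draws. The sketch below is a simple NumPy version for a unimodal posterior: it sorts the samples and picks the shortest window that contains the requested fraction of them. The helper name `hdi` and the simulated samples are assumptions made for illustration; with real results one would pass `group_posterior.values` instead.

```python
import numpy as np

def hdi(samples, prob=0.94):
    """Shortest interval containing about `prob` of the samples (unimodal case)."""
    x = np.sort(np.asarray(samples).ravel())
    n = x.size
    k = int(np.floor(prob * n))   # index offset spanned by each candidate interval
    widths = x[k:] - x[: n - k]   # width of every candidate interval
    start = int(np.argmin(widths))
    return x[start], x[start + k]

# Simulated stand-in for posterior draws of beta_1; not real Bambi output
rng = np.random.default_rng(0)
fake_posterior = rng.normal(2.0, 0.275, size=2000)

low, high = hdi(fake_posterior)
inside = np.mean((fake_posterior >= low) & (fake_posterior <= high))
print(low, high, inside)
```

If the resulting interval excludes 0, as it does for \(\beta_1\) here, the data and model give little support to equal population means.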

Another way to arrive at a similar conclusion is to calculate the probability that \(\beta_1 > 0\). This probability, practically equal to 1, tells us that the means of the two populations are different.

[12]:

# Probability that the posterior is > 0

(group_posterior.values > 0).mean()

[12]:

1.0

The linear model implicit in the t-test can also be specified without an intercept term, as in Model 2.

Model 2

When we carry out a two-sample t-test we’re implicitly using the following model:

\[\mu_i = \beta_i + \epsilon_i\]

where \(i = 0\) represents population 1, \(i = 1\) represents population 2, and \(\epsilon_i\) is a random error with mean 0. If we substitute the indicator values for the two groups we have

\[\mu_0 = \beta_0 + \epsilon_i\]

and

\[\mu_1 = \beta_1 + \epsilon_i\]

If \(\mu_0 = \mu_1\) then

\[\beta_0 + \epsilon_i = \beta_1 + \epsilon_i\]

\[\beta_0 = \beta_1\]

Thus, testing whether the means of the two populations are equal is equivalent to testing whether \(\beta_0 = \beta_1\).
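The relationship between the two parameterizations can be checked numerically. The following is a minimal least-squares sketch in NumPy, again using freshly simulated data, so the values differ from those in this notebook. Without an intercept, each coefficient is simply the sample mean of its group, and the difference between the two coefficients matches the indicator coefficient of Model 1.

```python
import numpy as np

rng = np.random.default_rng(1234)
a = rng.normal(6, 2.5, 160)  # population 1
b = rng.normal(8, 2, 120)    # population 2
y = np.concatenate([a, b])
is_b = np.concatenate([np.zeros(a.size), np.ones(b.size)])

# Model 2: one indicator column per group, no intercept
X_cell = np.column_stack([1 - is_b, is_b])
beta_cell, *_ = np.linalg.lstsq(X_cell, y, rcond=None)

# Model 1: intercept plus indicator for group b
X_ref = np.column_stack([np.ones(y.size), is_b])
beta_ref, *_ = np.linalg.lstsq(X_ref, y, rcond=None)

difference = beta_cell[1] - beta_cell[0]
print("group means:", beta_cell)
print("difference:", difference, "Model 1 slope:", beta_ref[1])
```

This is why the comparison in Model 2 is made on the difference of the two group coefficients rather than on a single parameter.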

Analysis

We start by instantiating the model described above. Here we drop the intercept that Bambi adds by default by including 0 in the formula; including -1 has the same effect.

[13]:

model_2 = bmb.Model("Val ~ 0 + Group", df)

results_2 = model_2.fit()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [Val_sigma, Group]

Sampling 2 chains for 1_000 tune and 1_000 draw iterations (2_000 + 2_000 draws total) took 2 seconds.

We’ve only specified the formula for the model, and Bambi automatically selected the prior distributions and the values of their parameters. We can inspect both the setup and the priors as follows:

[14]:

model_2

[14]:

Formula: Val ~ 0 + Group

Family name: Gaussian

Link: identity

Observations: 280

Priors:

Common-level effects

Group ~ Normal(mu: [0. 0.], sigma: [12.4107 12.4107])

Auxiliary parameters

sigma ~ HalfStudentT(nu: 4, sigma: 2.4567)

------

* To see a plot of the priors call the .plot_priors() method.

* To see a summary or plot of the posterior pass the object returned by .fit() to az.summary() or az.plot_trace()

[15]:

model_2.plot_priors();

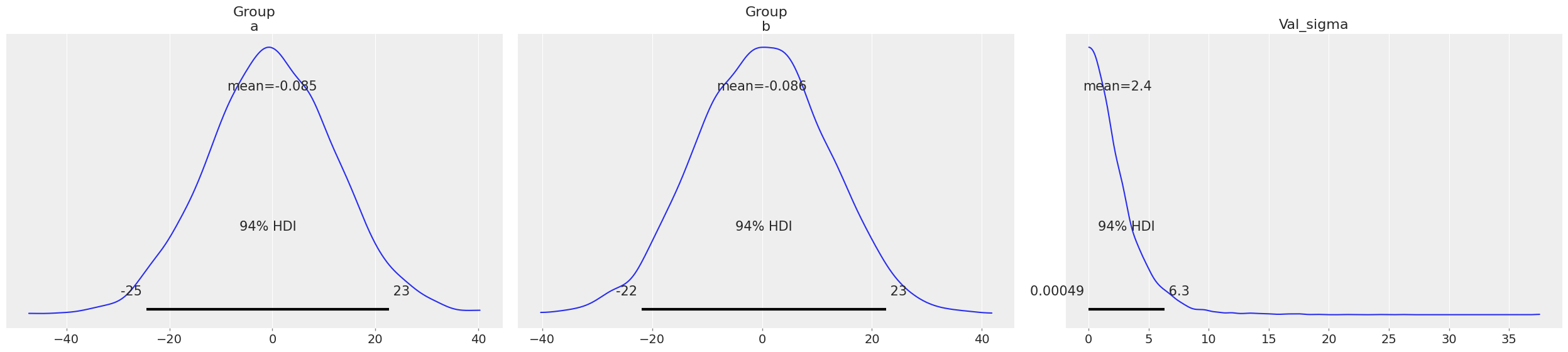

To inspect our posterior and the sampling process we can call az.plot_trace(). The option kind='rank_vlines' gives us a variant of the rank plot that uses lines and dots and helps us to inspect the stationarity of the chains. Since there is no clear pattern or serious deviations from the horizontal lines, we can conclude the chains are stationary.

[16]:

az.plot_trace(results_2, kind="rank_vlines");

[17]:

az.summary(results_2)

[17]:

| | mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat |

|---|---|---|---|---|---|---|---|---|---|

| Group[a] | 6.115 | 0.180 | 5.743 | 6.429 | 0.003 | 0.002 | 3028.0 | 1723.0 | 1.0 |

| Group[b] | 8.125 | 0.218 | 7.690 | 8.510 | 0.005 | 0.003 | 2305.0 | 1359.0 | 1.0 |

| Val_sigma | 2.262 | 0.098 | 2.086 | 2.441 | 0.002 | 0.001 | 2922.0 | 1586.0 | 1.0 |

In this summary we can see the estimated posterior distribution of the mean of each population. A simple way to compare them is to subtract one from the other. In the next plot we can see that the entire distribution of differences lies above zero, and that the mean of population 2 exceeds the mean of population 1 by about 2 on average.

[18]:

# Posterior of the difference between the group means (b minus a)

group_posterior = results_2.posterior['Group'][:,:,1] - results_2.posterior['Group'][:,:,0]

az.plot_posterior(group_posterior, ref_val=0);

Another way to arrive at a similar conclusion is to calculate the probability that \(\beta_1 - \beta_0 > 0\). This probability, practically equal to 1, tells us that the means of the two populations are different.

[19]:

# Probability that the posterior is > 0

(group_posterior.values > 0).mean()

[19]:

1.0

[20]:

%load_ext watermark

%watermark -n -u -v -iv -w

Last updated: Tue Jul 27 2021

Python implementation: CPython

Python version : 3.8.5

IPython version : 7.18.1

bambi : 0.5.0

numpy : 1.20.1

matplotlib: 3.3.3

arviz : 0.11.2

json : 2.0.9

pandas : 1.2.2

Watermark: 2.1.0