Multiple Regression

[1]:

import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import statsmodels.api as sm

[2]:

az.style.use("arviz-darkgrid")

SEED = 7355608

Load and examine Eugene-Springfield community sample data

Bambi comes with several datasets. These can be accessed via the load_data() function.

[3]:

data = bmb.load_data("ESCS")

np.round(data.describe(), 2)

[3]:

| | drugs | n | e | o | a | c | hones | emoti | extra | agree | consc | openn |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 | 604.00 |
| mean | 2.21 | 80.04 | 106.52 | 113.87 | 124.63 | 124.23 | 3.89 | 3.18 | 3.21 | 3.13 | 3.57 | 3.41 |
| std | 0.65 | 23.21 | 19.88 | 21.12 | 16.67 | 18.69 | 0.45 | 0.46 | 0.53 | 0.47 | 0.44 | 0.52 |
| min | 1.00 | 23.00 | 42.00 | 51.00 | 63.00 | 44.00 | 2.56 | 1.47 | 1.62 | 1.59 | 2.00 | 1.28 |
| 25% | 1.71 | 65.75 | 93.00 | 101.00 | 115.00 | 113.00 | 3.59 | 2.88 | 2.84 | 2.84 | 3.31 | 3.06 |
| 50% | 2.14 | 76.00 | 107.00 | 112.00 | 126.00 | 125.00 | 3.88 | 3.19 | 3.22 | 3.16 | 3.56 | 3.44 |
| 75% | 2.64 | 93.00 | 120.00 | 129.00 | 136.00 | 136.00 | 4.20 | 3.47 | 3.56 | 3.44 | 3.84 | 3.75 |
| max | 4.29 | 163.00 | 158.00 | 174.00 | 171.00 | 180.00 | 4.94 | 4.62 | 4.75 | 4.44 | 4.75 | 4.72 |

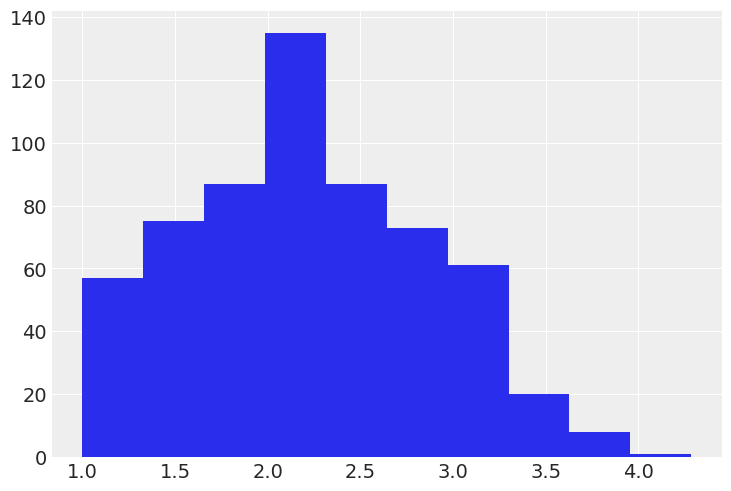

It’s always a good idea to start off with some basic plotting. Here’s what our outcome variable drugs (some index of self-reported illegal drug use) looks like:

[4]:

data["drugs"].hist();

The five numerical predictors that we’ll use are sum-scores measuring participants’ standings on the Big Five personality dimensions. The dimensions are:

O = Openness to experience

C = Conscientiousness

E = Extraversion

A = Agreeableness

N = Neuroticism

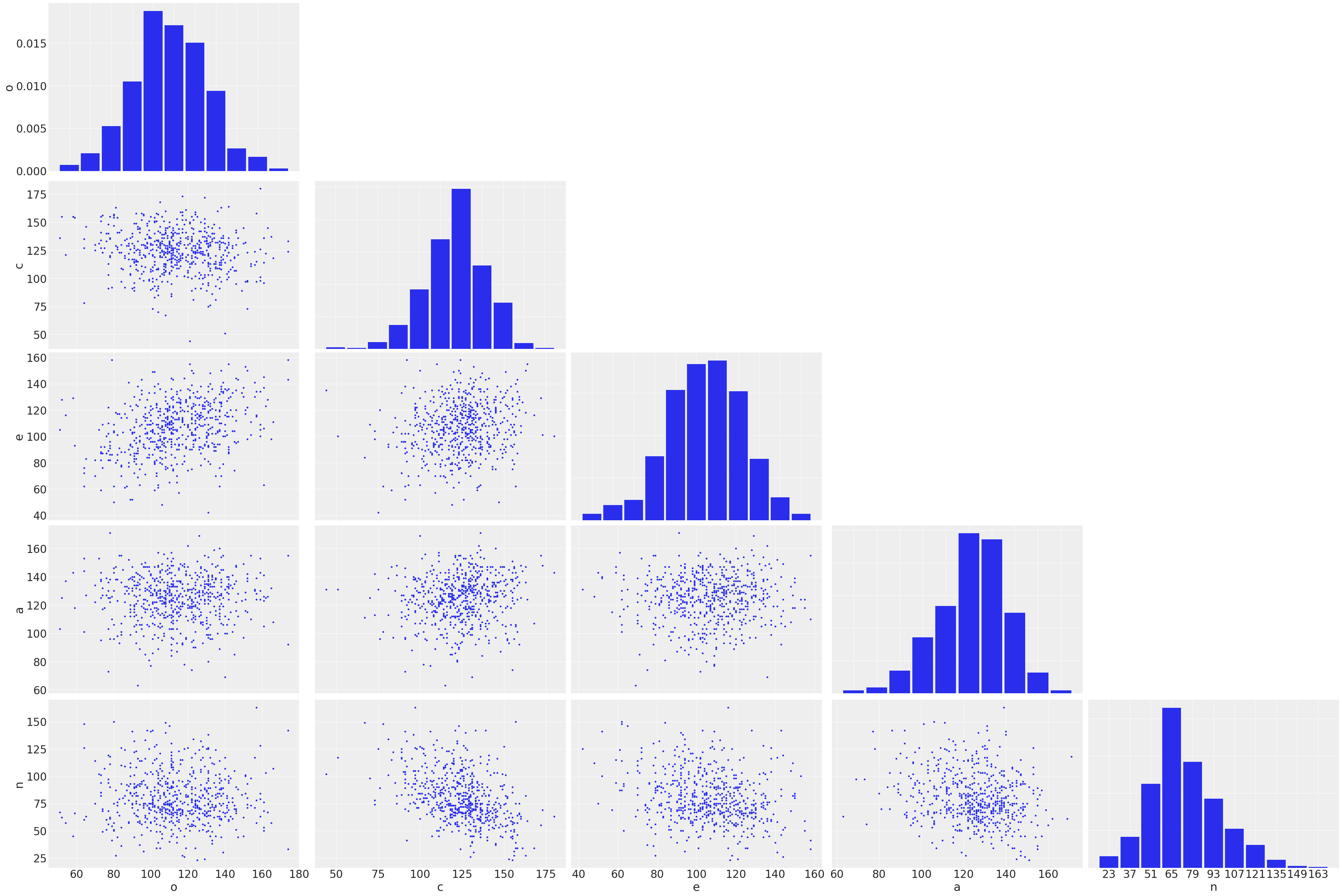

Here’s what our predictors look like:

[5]:

az.plot_pair(data[["o", "c", "e", "a", "n"]].to_dict("list"), marginals=True, textsize=24);

We can easily see all the predictors are more or less symmetrically distributed without outliers and the pairwise correlations between them are not strong.

Specify model and examine priors

We’re going to fit a pretty straightforward additive multiple regression model predicting drug index from all 5 personality dimension scores. It’s simple to specify the model using a familiar formula interface. Here we also tell Bambi to use 2000 tuning steps and 2000 draws per chain; as the sampler log below shows, two Markov Chain Monte Carlo (MCMC) chains are run in parallel. The 2000 tuning steps of each chain are discarded, and the 2000 draws that follow are considered to be taken from the joint posterior distribution of all the parameters (to be confirmed when we analyze the convergence of the chains).

[6]:

model = bmb.Model("drugs ~ o + c + e + a + n", data)

fitted = model.fit(tune=2000, draws=2000, init="adapt_diag", random_seed=SEED)

Auto-assigning NUTS sampler...

Initializing NUTS using adapt_diag...

Multiprocess sampling (2 chains in 2 jobs)

NUTS: [drugs_sigma, n, a, e, c, o, Intercept]

Sampling 2 chains for 2_000 tune and 2_000 draw iterations (4_000 + 4_000 draws total) took 6 seconds.

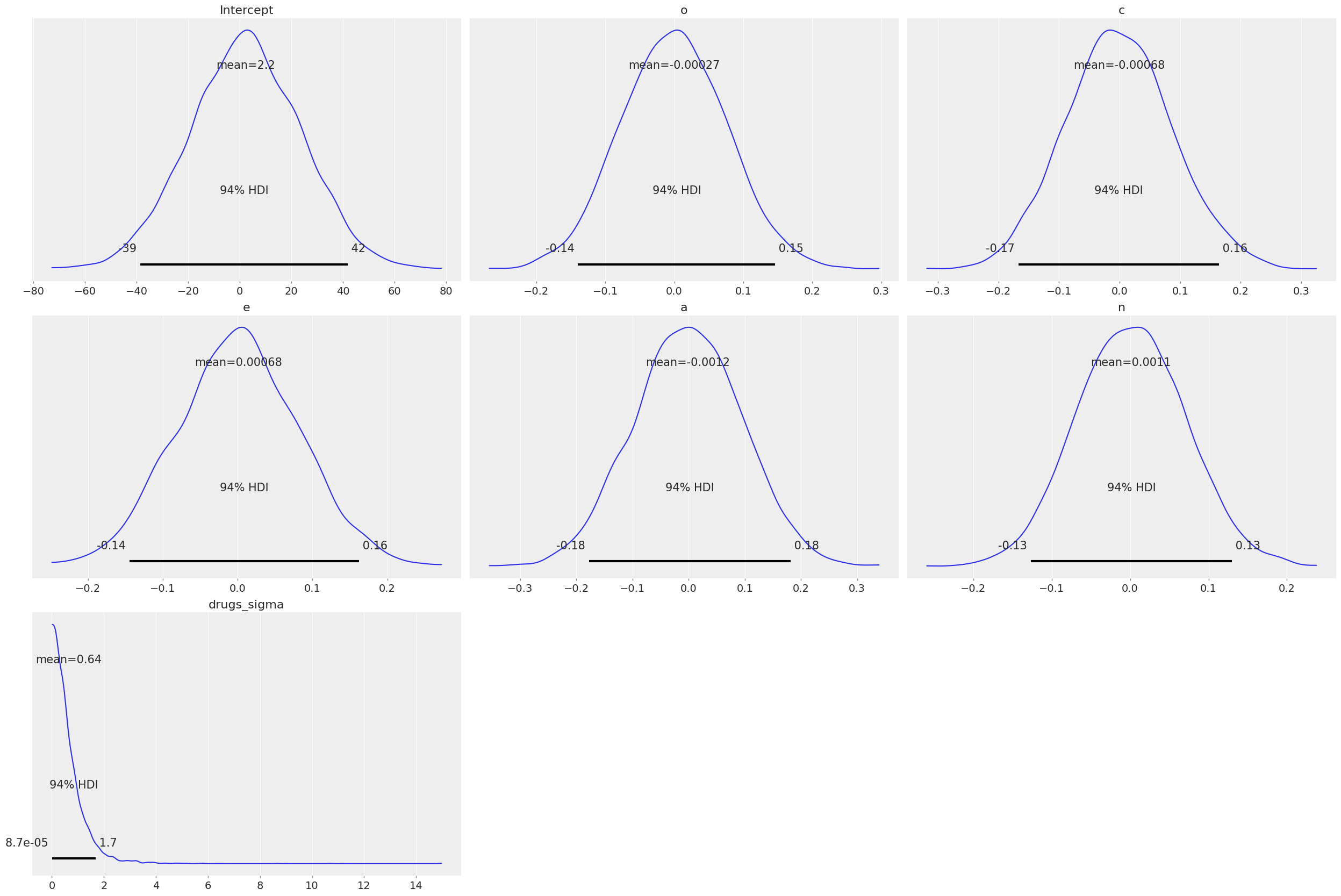

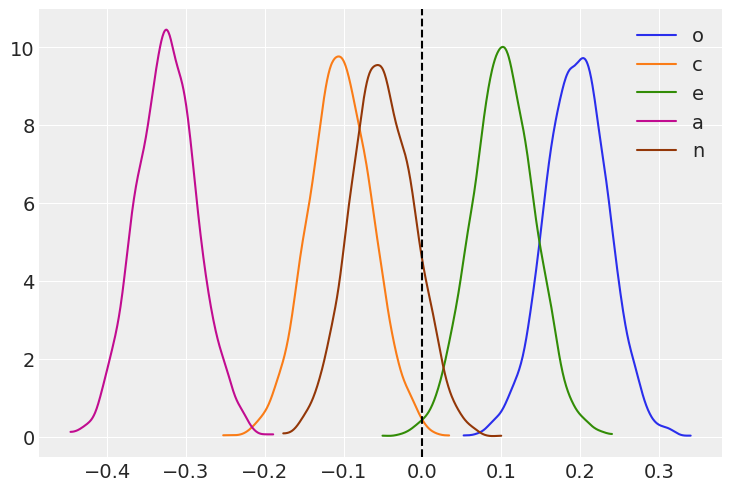

Great! But this is a Bayesian model, right? What about the priors? If no priors are given explicitly by the user, then Bambi chooses smart default priors for all parameters of the model based on the implied partial correlations between the outcome and the predictors. Here’s what the default priors look like in this case – the plots below show 1000 draws from each prior distribution:

[7]:

model.plot_priors();

[8]:

# Normal priors on the coefficients

{x.name:x.prior.args for x in model.terms.values()}

[8]:

{'Intercept': {'mu': array(2.21014664), 'sigma': array(21.19375074)},

'o': {'mu': array(0.), 'sigma': array(0.0768135)},

'c': {'mu': array(0.), 'sigma': array(0.08679683)},

'e': {'mu': array(0.), 'sigma': array(0.0815892)},

'a': {'mu': array(0.), 'sigma': array(0.09727366)},

'n': {'mu': array(0.), 'sigma': array(0.06987412)}}

[9]:

# HalfStudentT prior on the residual standard deviation

model.response.family.likelihood.priors

[9]:

{'sigma': HalfStudentT(nu: 4, sigma: 0.6482)}

You could also just print the model, which shows the same information about the priors:

[10]:

model

[10]:

Formula: drugs ~ o + c + e + a + n

Family name: Gaussian

Link: identity

Observations: 604

Priors:

Common-level effects

Intercept ~ Normal(mu: 2.2101, sigma: 21.1938)

o ~ Normal(mu: 0.0, sigma: 0.0768)

c ~ Normal(mu: 0.0, sigma: 0.0868)

e ~ Normal(mu: 0.0, sigma: 0.0816)

a ~ Normal(mu: 0.0, sigma: 0.0973)

n ~ Normal(mu: 0.0, sigma: 0.0699)

Auxiliary parameters

sigma ~ HalfStudentT(nu: 4, sigma: 0.6482)

------

* To see a plot of the priors call the .plot_priors() method.

* To see a summary or plot of the posterior pass the object returned by .fit() to az.summary() or az.plot_trace()

Some more info about the default prior distributions can be found in this technical paper.

Notice the apparently small SDs of the slope priors. This is due to the relative scales of the outcome and the predictors: remember from the plots above that the outcome, drugs, ranges from 1 to about 4, while the predictors all range from about 20 to 180 or so. A one-unit change in any of the predictors – which is a trivial increase on the scale of the predictors – is likely to lead to a very small absolute change in the outcome. Believe it or not, these priors are actually quite wide on the partial correlation scale!
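To make the last claim concrete, here is a small arithmetic sketch. It rescales the default slope prior SDs printed above by the slope-to-partial-correlation constants that this notebook computes in the "Summarize effects on partial correlation scale" section. The dictionary names are illustrative, and the numbers are copied by hand from the outputs shown in this notebook.

```python
# Default slope prior SDs, copied from the prior output above
prior_sd = {"o": 0.0768135, "c": 0.08679683, "e": 0.0815892, "a": 0.09727366, "n": 0.06987412}

# Slope-to-partial-correlation constants, copied from the slope_constant
# output computed later in this notebook
slope_constant = {"o": 32.392557, "c": 27.674284, "e": 30.305117, "a": 26.113299, "n": 34.130431}

# Rescale each prior SD so it is expressed in partial-correlation units
prior_sd_pcorr = {name: prior_sd[name] * slope_constant[name] for name in prior_sd}

for name, sd in prior_sd_pcorr.items():
    print(f"{name}: {sd:.2f}")
```

Every rescaled SD comes out at roughly 2.4 to 2.5. Since a partial correlation can only take values between -1 and 1, a prior SD of that size places very little constraint on it.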

Examine the model results

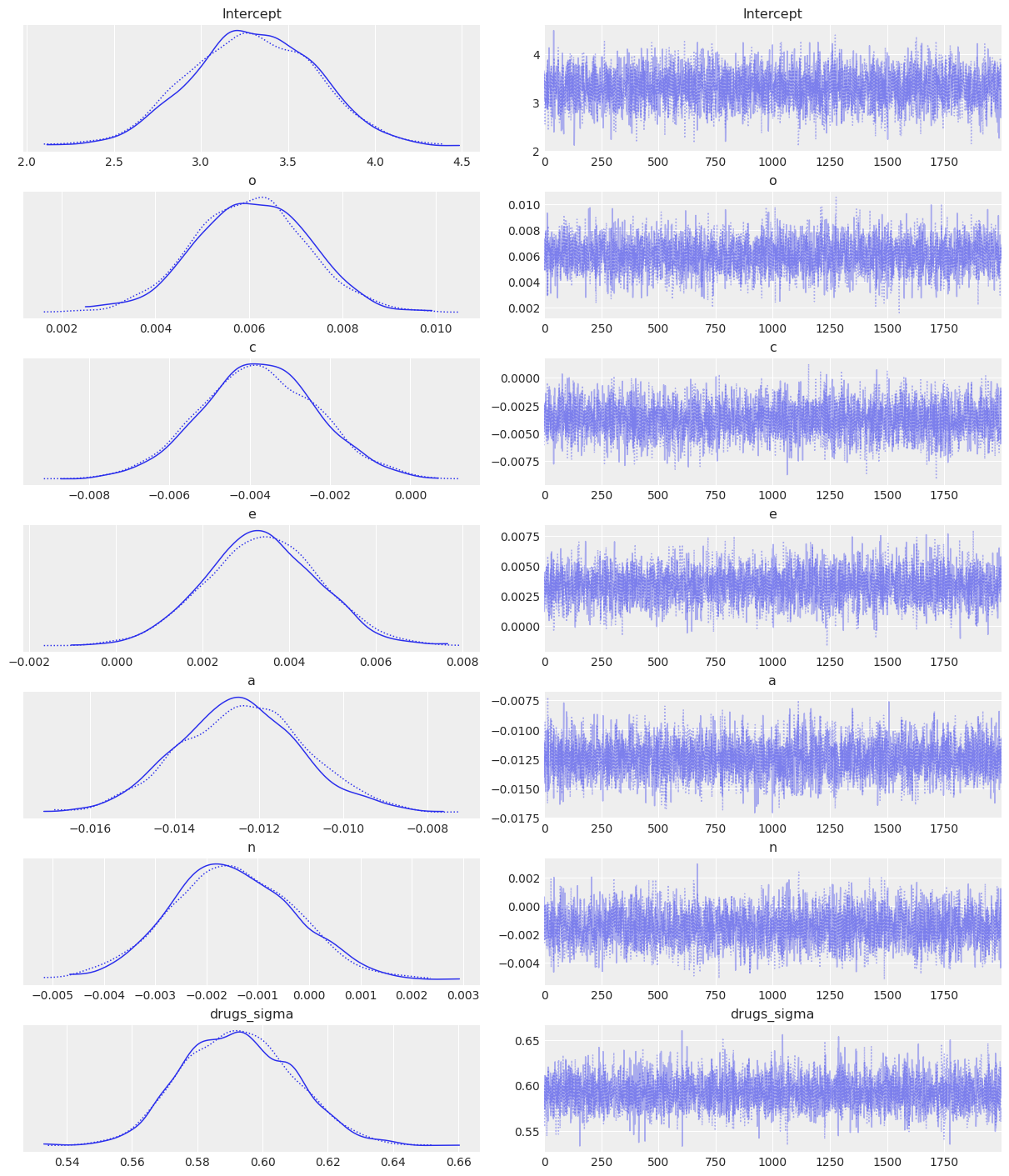

Let’s start with a pretty picture of the parameter estimates!

[11]:

az.plot_trace(fitted);

The left panels show the marginal posterior distributions for all of the model’s parameters, which summarize the most plausible values of the regression coefficients, given the data we have now observed. These posterior density plots show two overlaid distributions because we ran two MCMC chains. The panels on the right are “trace plots” showing the sampling paths of the two MCMC chains as they wander through the parameter space. If any of these paths exhibited a pattern other than white noise we would be concerned about the convergence of the chains.

A much more succinct (non-graphical) summary of the parameter estimates can be found like so:

[12]:

az.summary(fitted)

[12]:

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 3.300 | 0.359 | 2.642 | 3.963 | 0.006 | 0.004 | 3907.0 | 2885.0 | 1.0 |

| o | 0.006 | 0.001 | 0.004 | 0.008 | 0.000 | 0.000 | 4226.0 | 3071.0 | 1.0 |

| c | -0.004 | 0.001 | -0.007 | -0.001 | 0.000 | 0.000 | 4131.0 | 3767.0 | 1.0 |

| e | 0.003 | 0.001 | 0.001 | 0.006 | 0.000 | 0.000 | 4228.0 | 3410.0 | 1.0 |

| a | -0.012 | 0.001 | -0.015 | -0.010 | 0.000 | 0.000 | 4774.0 | 3077.0 | 1.0 |

| n | -0.001 | 0.001 | -0.004 | 0.001 | 0.000 | 0.000 | 3731.0 | 3223.0 | 1.0 |

| drugs_sigma | 0.593 | 0.017 | 0.563 | 0.626 | 0.000 | 0.000 | 5357.0 | 3088.0 | 1.0 |

When there are multiple MCMC chains, the default summary output includes some basic convergence diagnostic info (the effective MCMC sample sizes and the Gelman-Rubin “R-hat” statistics), although in this case it’s pretty clear from the trace plots above that the chains have converged just fine.
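For intuition about what R-hat measures, the following is a minimal sketch of the classic (non-split) Gelman-Rubin statistic written with NumPy on synthetic chains. It is not the computation ArviZ performs (ArviZ's default is a more refined rank-normalized, split-chain variant), and the function name `gelman_rubin` is a hypothetical helper introduced only for this illustration.

```python
import numpy as np


def gelman_rubin(chains):
    """Classic (non-split) Gelman-Rubin R-hat for an array of shape (n_chains, n_draws)."""
    chains = np.asarray(chains, dtype=float)
    n_draws = chains.shape[1]
    within = chains.var(axis=1, ddof=1).mean()           # W: mean within-chain variance
    between = n_draws * chains.mean(axis=1).var(ddof=1)  # B: scaled variance of chain means
    pooled = (n_draws - 1) / n_draws * within + between / n_draws
    return float(np.sqrt(pooled / within))


rng = np.random.default_rng(0)
mixed = rng.normal(0.0, 1.0, size=(2, 2000))  # two synthetic chains exploring the same target
stuck = mixed + np.array([[0.0], [3.0]])      # same chains, but the second one is shifted away

rhat_mixed = gelman_rubin(mixed)
rhat_stuck = gelman_rubin(stuck)
print(rhat_mixed, rhat_stuck)
```

When the chains agree, the pooled variance is close to the within-chain variance and the ratio sits near 1, as in the summary table above. When one chain is stuck somewhere else, the between-chain term inflates the ratio well above 1.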

Summarize effects on partial correlation scale

[13]:

samples = fitted.posterior

It turns out that we can convert each regression coefficient into a partial correlation by multiplying it by a constant that depends on (1) the SD of the predictor, (2) the SD of the outcome, and (3) the degree of multicollinearity with the set of other predictors. In the code below, two of these statistics are collected in a dictionary called dm_statistics (for design matrix statistics), and we compute the others manually.

Some information about the relationship between linear regression parameters and partial correlation can be found here.

[14]:

# the names of the predictors
varnames = ['o', 'c', 'e', 'a', 'n']

# compute the needed statistics, like the R-squared obtained when each predictor is
# the response and all the other predictors are the predictors

# x_matrix = common effects design matrix (excluding intercept/constant term)
terms = [t for t in model.common_terms.values() if t.name != "Intercept"]
x_matrix = [pd.DataFrame(x.data, columns=x.levels) for x in terms]
x_matrix = pd.concat(x_matrix, axis=1)

dm_statistics = {
    'r2_x': pd.Series(
        {
            x: sm.OLS(
                endog=x_matrix[x],
                exog=sm.add_constant(x_matrix.drop(x, axis=1))
                if "Intercept" in model.term_names
                else x_matrix.drop(x, axis=1),
            )
            .fit()
            .rsquared
            for x in list(x_matrix.columns)
        }
    ),
    'sigma_x': x_matrix.std(),
    'mean_x': x_matrix.mean(axis=0),
}

r2_x = dm_statistics['r2_x']
sd_x = dm_statistics['sigma_x']
r2_y = pd.Series(
    [
        sm.OLS(
            endog=data['drugs'],
            exog=sm.add_constant(data[[p for p in varnames if p != x]]),
        )
        .fit()
        .rsquared
        for x in varnames
    ],
    index=varnames,
)
sd_y = data['drugs'].std()

# compute the products to multiply each slope with to produce the partial correlations
slope_constant = (sd_x[varnames] / sd_y) * ((1 - r2_x[varnames]) / (1 - r2_y)) ** 0.5
slope_constant


[14]:

o 32.392557

c 27.674284

e 30.305117

a 26.113299

n 34.130431

dtype: float64
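As a sanity check on this kind of conversion, the sketch below uses synthetic data (not the ESCS data) and plain NumPy least squares instead of statsmodels. The helper `ols` and all variable names are assumptions made for illustration. For each predictor it compares "slope times constant" with the partial correlation obtained the textbook way, by correlating the residuals of the predictor and of the outcome after regressing both on the remaining predictors.

```python
import numpy as np

rng = np.random.default_rng(1)
n_obs = 500

# Synthetic, mildly correlated predictors and a linear outcome (illustrative only)
X = rng.normal(size=(n_obs, 3))
X[:, 1] += 0.5 * X[:, 0]
y = 1.0 + X @ np.array([0.4, -0.3, 0.0]) + rng.normal(size=n_obs)


def ols(target, predictors):
    """Return (coefficients, residuals, R-squared) of an OLS fit with an intercept."""
    design = np.column_stack([np.ones(len(target)), predictors])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    resid = target - design @ coef
    r2 = 1.0 - resid.var() / target.var()
    return coef, resid, r2


slopes = ols(y, X)[0][1:]  # full-model slopes, intercept dropped

checks = []
for j in range(X.shape[1]):
    others = np.delete(X, j, axis=1)
    _, resid_x, r2_x = ols(X[:, j], others)  # predictor j on the other predictors
    _, resid_y, r2_y = ols(y, others)        # outcome on the other predictors
    constant = (X[:, j].std() / y.std()) * np.sqrt((1 - r2_x) / (1 - r2_y))
    via_slope = slopes[j] * constant
    via_residuals = np.corrcoef(resid_x, resid_y)[0, 1]
    checks.append((via_slope, via_residuals))
    print(j, round(via_slope, 4), round(via_residuals, 4))
```

The two columns agree up to floating-point error, which is why multiplying posterior draws of a slope by a fixed constant yields draws on the partial correlation scale.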

Now we just multiply each sampled regression coefficient by its corresponding slope_constant to transform it into a sample partial correlation coefficient.

[15]:

# We also stack draws and chains into samples.

# This will be useful for the following plots

pcorr_samples = (samples[varnames] * slope_constant).stack(samples=("draw", "chain"))

And voilà! We now have a joint posterior distribution for the partial correlation coefficients. Let’s plot the marginal posterior distributions:

[16]:

# Pass the same axes to az.plot_kde to have all the densities in the same plot
_, ax = plt.subplots()
for idx, (k, v) in enumerate(pcorr_samples.items()):
    az.plot_kde(v.values, label=k, plot_kwargs={'color':f'C{idx}'}, ax=ax)
ax.axvline(x=0, color='k', linestyle='--');

The means of these distributions serve as good point estimates of the partial correlations:

[17]:

pcorr_samples.mean()

[17]:

<xarray.Dataset>
Dimensions:  ()
Data variables:
    o        float64 0.1956
    c        float64 -0.1043
    e        float64 0.1021
    a        float64 -0.3245
    n        float64 -0.05098
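A point estimate is usually reported together with an interval. The sketch below shows one simple way to do that with NumPy on synthetic draws standing in for the stacked posterior samples of a single partial correlation. Note that it uses an equal-tailed 94% interval, which is a simpler substitute for the highest-density interval (HDI) that az.summary reports, so the two will generally differ slightly.

```python
import numpy as np

rng = np.random.default_rng(2)

# Synthetic stand-in for 4000 stacked posterior draws of one partial correlation
draws = rng.normal(loc=-0.32, scale=0.04, size=4000)

point_estimate = draws.mean()
lower, upper = np.quantile(draws, [0.03, 0.97])  # equal-tailed 94% interval

print(f"mean={point_estimate:.3f}, 94% interval=({lower:.3f}, {upper:.3f})")
```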

Relative importance: Which predictors have the strongest effects (defined in terms of squared partial correlation)?

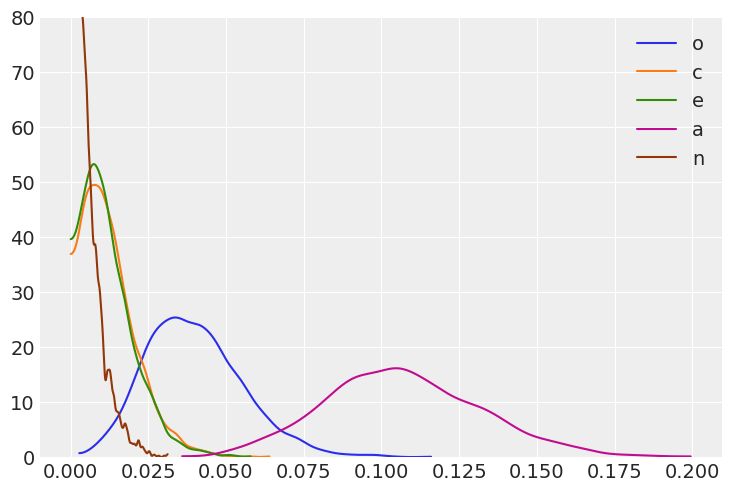

We just take the square of the partial correlation coefficients, so it’s easy to get posteriors on that scale too:

[18]:

_, ax = plt.subplots()
for idx, (k, v) in enumerate(pcorr_samples.items()):
    az.plot_kde(v.values ** 2, label=k, plot_kwargs={'color':f'C{idx}'}, ax=ax)
ax.set_ylim(0, 80);

With these posteriors we can ask: What is the probability that the squared partial correlation for Openness (blue) is greater than the squared partial correlation for Conscientiousness (orange)?

[19]:

(pcorr_samples['o'] ** 2 > pcorr_samples['c'] ** 2).mean()

[19]:

<xarray.DataArray ()>
array(0.93075)

If we contrast this result with the plot we’ve just shown, we may think the probability is too high when looking at the overlap between the blue and orange curves. However, the previous plot only shows marginal posteriors, which don’t account for correlations between the coefficients. In our Bayesian world, the model parameters are random variables (and consequently, so is any combination of them). As such, the squared partial correlations have a joint distribution. When computing probabilities involving at least two of these parameters, one has to use the joint distribution. Otherwise, if we choose to work only with marginals, we are implicitly assuming independence.
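The difference between the two approaches can be seen in a small simulation. The sketch below uses synthetic correlated draws (not the posterior from this notebook) and compares the probability computed draw by draw with the one obtained after shuffling one of the variables, which keeps both marginals intact but destroys the pairing. The correlation of 0.8 and the means are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n_draws = 20_000

# Synthetic "posterior draws" for two quantities with correlation 0.8 (illustrative only)
cov = [[1.0, 0.8], [0.8, 1.0]]
a, b = rng.multivariate_normal(mean=[0.3, 0.0], cov=cov, size=n_draws).T

# Joint: compare the quantities draw by draw, keeping their dependence
p_joint = np.mean(a > b)

# Marginals only: shuffling b breaks the pairing, which mimics assuming independence
p_independent = np.mean(a > rng.permutation(b))

print(p_joint, p_independent)
```

Both calculations use exactly the same marginal distributions, yet they give clearly different answers. The size and direction of the gap depend on how the two quantities are correlated, so the marginal plots alone cannot tell us what the joint probability is.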

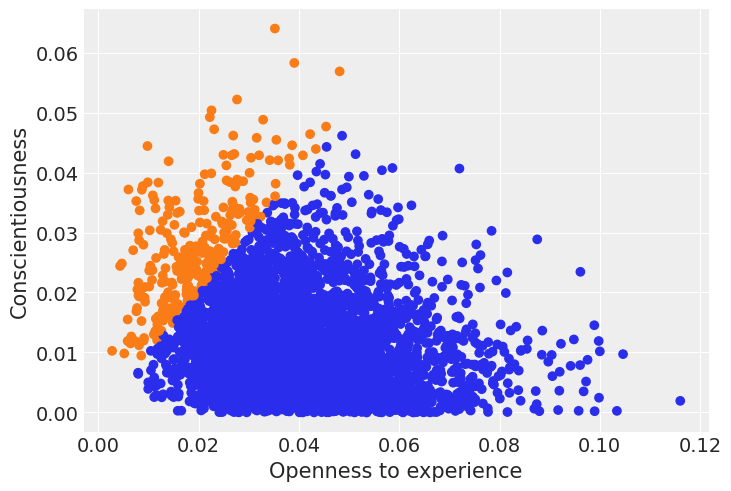

Let’s check the joint distribution of the squared partial correlations for Openness and Conscientiousness. We highlight in blue the draws where the squared partial correlation for Openness is greater than the one for Conscientiousness.

[20]:

sq_partial_c = pcorr_samples['c'].values ** 2

sq_partial_o = pcorr_samples['o'].values ** 2

[21]:

colors = list(np.where(sq_partial_c > sq_partial_o, "C1", "C0"))

plt.scatter(sq_partial_o, sq_partial_c, c=colors)

plt.xlabel("Openness to experience")

plt.ylabel("Conscientiousness");

We can see that in the great majority of the draws (93.1%) the squared partial correlation for Openness is greater than the one for Conscientiousness. In fact, we can check that the correlation between them is

[22]:

np.corrcoef(sq_partial_c, sq_partial_o)[0, 1]

[22]:

-0.20926927747820298

which explains why only looking at the marginal posteriors (i.e. assuming independence) is not the best approach here.

For each predictor, what is the probability that it has the largest squared partial correlation?

[23]:

pc_df = pcorr_samples.to_dataframe()

(pc_df**2).idxmax(axis=1).value_counts() / len(pc_df.index)

[23]:

a 0.991

o 0.009

dtype: float64

Agreeableness is clearly the strongest predictor of drug use among the Big Five personality traits in terms of partial correlation, but it’s still not a particularly strong predictor in an absolute sense. Walter Mischel famously claimed that it is rare to see correlations between personality measures and relevant behavioral outcomes exceed 0.3. In this case, the probability that the agreeableness partial correlation exceeds 0.3 in absolute value is:

[24]:

(np.abs(pcorr_samples['a']) > 0.3).mean()

[24]:

<xarray.DataArray 'a' ()>
array(0.7405)

Posterior Predictive

Once we have computed the posterior distribution, we can use it to compute the posterior predictive distribution. As the name implies, these are predictions assuming the model’s parameters are distributed as the posterior. Thus, the posterior predictive includes the uncertainty about the parameters.
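Conceptually, for a Gaussian linear model this amounts to two steps per posterior draw: compute the mean for every observation, then simulate an outcome around that mean using the drawn residual SD. The NumPy sketch below illustrates the idea with one synthetic predictor and synthetic stand-ins for posterior draws. It is an illustration of the principle under those assumptions, not Bambi's internal implementation.

```python
import numpy as np

rng = np.random.default_rng(4)
n_obs, n_draws = 50, 200

x = rng.normal(100.0, 20.0, size=n_obs)  # synthetic predictor

# Synthetic stand-ins for posterior draws of intercept, slope and residual SD
intercept = rng.normal(3.0, 0.1, size=n_draws)
slope = rng.normal(-0.01, 0.001, size=n_draws)
sigma = np.abs(rng.normal(0.6, 0.02, size=n_draws))

# One mean per (draw, observation), then one simulated outcome around each mean
mu = intercept[:, None] + slope[:, None] * x[None, :]
y_rep = rng.normal(mu, sigma[:, None])

print(y_rep.shape)
```

Each row of `y_rep` is one replicated dataset. The spread across rows reflects parameter uncertainty, and the spread within a row additionally reflects the residual noise.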

With Bambi we can use the model’s predict() method with the fitted az.InferenceData to generate posterior predictive samples, which are then automatically added to the az.InferenceData object.

[25]:

posterior_predictive = model.predict(fitted, kind="pps", draws=500)

fitted

[25]:

posterior

<xarray.Dataset>
Dimensions:      (chain: 2, draw: 2000)
Coordinates:
  * chain        (chain) int64 0 1
  * draw         (draw) int64 0 1 2 3 4 5 6 ... 1994 1995 1996 1997 1998 1999
Data variables:
    Intercept    (chain, draw) float64 3.651 3.047 3.137 ... 2.909 2.858 3.594
    o            (chain, draw) float64 0.005363 0.007304 ... 0.005835 0.006907
    c            (chain, draw) float64 -0.004283 -0.002374 ... -0.004987
    e            (chain, draw) float64 0.003819 0.001203 ... 0.003522 0.002943
    a            (chain, draw) float64 -0.01406 -0.01225 ... -0.01165 -0.01402
    n            (chain, draw) float64 -0.002406 0.000624 ... -0.001155
    drugs_sigma  (chain, draw) float64 0.6013 0.5739 0.6106 ... 0.5752 0.5647
Attributes:
    created_at:                  2021-11-14T13:33:08.046115
    arviz_version:               0.11.4
    inference_library:           pymc3
    inference_library_version:   3.11.2
    sampling_time:               6.432605028152466
    tuning_steps:                2000
    modeling_interface:          bambi
    modeling_interface_version:  0.6.3

log_likelihood

<xarray.Dataset>
Dimensions:      (chain: 2, draw: 2000, drugs_dim_0: 604)
Coordinates:
  * chain        (chain) int64 0 1
  * draw         (draw) int64 0 1 2 3 4 5 6 ... 1994 1995 1996 1997 1998 1999
  * drugs_dim_0  (drugs_dim_0) int64 0 1 2 3 4 5 6 ... 598 599 600 601 602 603
Data variables:
    drugs        (chain, draw, drugs_dim_0) float64 -0.8408 -1.814 ... -2.477
Attributes:
    created_at:                  2021-11-14T13:33:08.545740
    arviz_version:               0.11.4
    inference_library:           pymc3
    inference_library_version:   3.11.2
    modeling_interface:          bambi
    modeling_interface_version:  0.6.3

sample_stats

<xarray.Dataset>
Dimensions:             (chain: 2, draw: 2000)
Coordinates:
  * chain               (chain) int64 0 1
  * draw                (draw) int64 0 1 2 3 4 5 ... 1995 1996 1997 1998 1999
Data variables: (12/13)
    tree_depth          (chain, draw) int64 2 3 3 3 3 3 3 2 ... 3 2 3 3 2 2 2 2
    step_size           (chain, draw) float64 1.275 1.275 ... 0.9102 0.9102
    process_time_diff   (chain, draw) float64 0.0005706 0.001164 ... 0.0005448
    perf_counter_start  (chain, draw) float64 3.36e+03 3.36e+03 ... 3.362e+03
    n_steps             (chain, draw) float64 3.0 7.0 7.0 7.0 ... 3.0 3.0 3.0
    max_energy_error    (chain, draw) float64 -0.3405 2.412 ... 0.4622 1.18
    ...                  ...
    diverging           (chain, draw) bool False False False ... False False
    energy              (chain, draw) float64 537.9 542.9 539.8 ... 539.6 540.5
    acceptance_rate     (chain, draw) float64 1.0 0.4657 1.0 ... 0.8814 0.5791
    perf_counter_diff   (chain, draw) float64 0.0005708 0.001732 ... 0.000545
    lp                  (chain, draw) float64 -535.2 -538.4 ... -536.4 -537.3
    step_size_bar       (chain, draw) float64 0.9295 0.9295 ... 0.8622 0.8622
Attributes:
    created_at:                  2021-11-14T13:33:08.053283
    arviz_version:               0.11.4
    inference_library:           pymc3
    inference_library_version:   3.11.2
    sampling_time:               6.432605028152466
    tuning_steps:                2000
    modeling_interface:          bambi
    modeling_interface_version:  0.6.3

observed_data

<xarray.Dataset>
Dimensions:      (drugs_dim_0: 604)
Coordinates:
  * drugs_dim_0  (drugs_dim_0) int64 0 1 2 3 4 5 6 ... 598 599 600 601 602 603
Data variables:
    drugs        (drugs_dim_0) float64 1.857 3.071 1.571 2.214 ... 1.5 2.5 3.357
Attributes:
    created_at:                  2021-11-14T13:33:08.546886
    arviz_version:               0.11.4
    inference_library:           pymc3
    inference_library_version:   3.11.2
    modeling_interface:          bambi
    modeling_interface_version:  0.6.3

posterior_predictive

<xarray.Dataset>
Dimensions:      (chain: 2, draw: 500, drugs_dim_0: 604)
Coordinates:
  * chain        (chain) int64 0 1
  * draw         (draw) int64 0 1 2 3 4 5 6 7 ... 493 494 495 496 497 498 499
  * drugs_dim_0  (drugs_dim_0) int64 0 1 2 3 4 5 6 ... 598 599 600 601 602 603
Data variables:
    drugs        (chain, draw, drugs_dim_0) float64 4.075 2.024 ... 3.012 2.02
Attributes:
    created_at:                  2021-11-14T13:33:13.565869
    arviz_version:               0.11.4
    modeling_interface:          bambi
    modeling_interface_version:  0.6.3

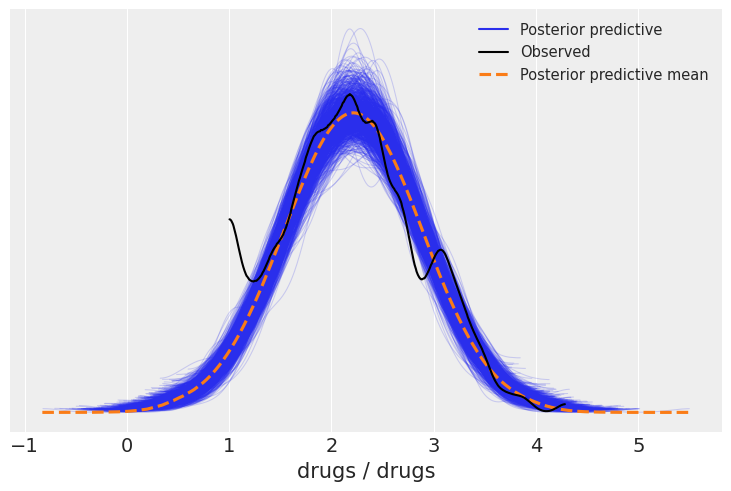

One use of the posterior predictive is as a diagnostic tool, shown below using az.plot_ppc. The blue lines represent the posterior predictive distribution estimates, and the black line represents the observed data. Our posterior predictions seem to do an adequate job of representing the observed data in all regions except near the value of 1, where the observed data and posterior estimates diverge.

[26]:

az.plot_ppc(fitted);
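A visual mismatch like the one near 1 can also be expressed as a number by comparing a summary statistic of the observed data with the same statistic in every replicated dataset. The sketch below does this on synthetic data, under the assumption that the mismatch is related to the outcome having a lower bound of 1 (the minimum of drugs in the summary table) that an unbounded Gaussian likelihood does not respect. The statistic, the helper `share_below` and the simulated arrays are all illustrative choices, not part of the original analysis.

```python
import numpy as np

rng = np.random.default_rng(5)

# Synthetic observed outcome that never goes below 1 (illustrative only)
observed = np.clip(rng.normal(2.2, 0.65, size=600), 1.0, None)

# Synthetic replicated datasets from an unbounded Gaussian with similar mean and SD
replicated = rng.normal(observed.mean(), observed.std(), size=(500, observed.size))


def share_below(values, threshold=1.0):
    """Fraction of values strictly below the threshold, along the last axis."""
    return np.mean(values < threshold, axis=-1)


obs_stat = share_below(observed)    # 0 by construction, because of the clipping
rep_stat = share_below(replicated)  # one value per replicated dataset

# Share of replicated datasets whose statistic is as small as the observed one
p_value = np.mean(rep_stat <= obs_stat)
print(obs_stat, rep_stat.mean(), p_value)
```

If almost no replicated dataset reproduces the observed statistic, the model is missing that feature of the data. With real results, the replicated array would come from the posterior_predictive group of the az.InferenceData object rather than from a simulation like this one.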

[27]:

%load_ext watermark

%watermark -n -u -v -iv -w

Last updated: Sun Nov 14 2021

Python implementation: CPython

Python version : 3.8.5

IPython version : 7.18.1

numpy : 1.21.2

arviz : 0.11.4

bambi : 0.6.3

pandas : 1.3.1

statsmodels: 0.12.2

matplotlib : 3.4.3

sys : 3.8.5 (default, Sep 4 2020, 07:30:14)

[GCC 7.3.0]

Watermark: 2.1.0